World Order

Archive

60 results

![]() By Oscar Berry

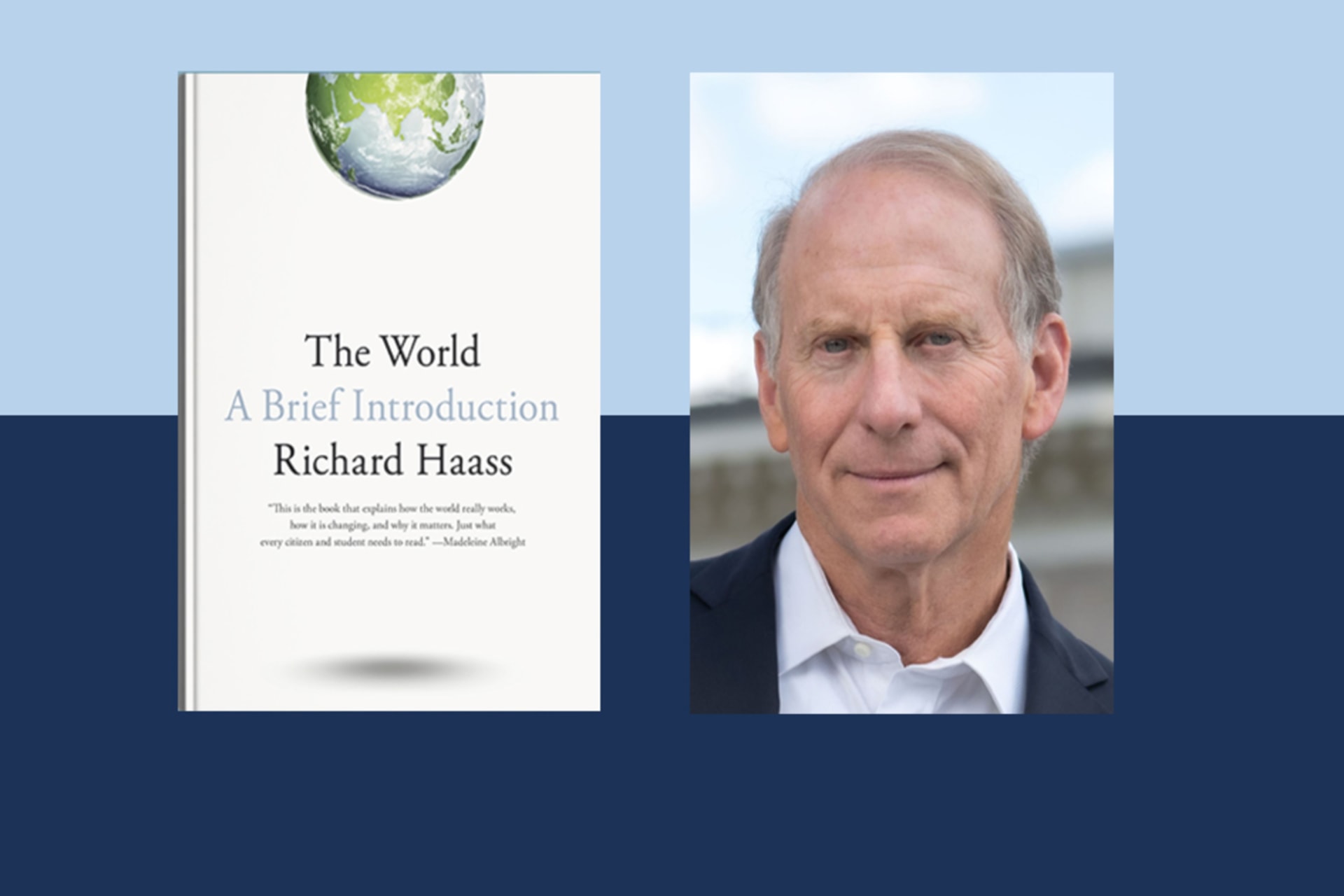

By Oscar Berry![]() By Richard Haass

By Richard Haass![]() By Stewart M. Patrick

By Stewart M. Patrick![]() By Stewart M. Patrick

By Stewart M. Patrick![]() By Richard Haass and Charles A. Kupchan

By Richard Haass and Charles A. Kupchan![]() By Jennifer Hillman and David Sacks

By Jennifer Hillman and David Sacks![]() Meeting

Meeting![]() By Stewart M. Patrick

By Stewart M. Patrick![]() By Stewart M. Patrick

By Stewart M. Patrick![]() By Richard Haass

By Richard Haass![]() By Richard Haass

By Richard Haass![]() By Richard Haass

By Richard Haass![]() By Stewart M. Patrick

By Stewart M. Patrick![]() By Richard Haass

By Richard Haass